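Before configuring and using ingress-nginx, you should first learn how service discovery works in Kubernetes and which service discovery rules are commonly used.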

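Official documentation: https://kubernetes.github.io/ingress-nginx/

The GitHub repository, https://github.com/kubernetes/ingress-nginx/, is the place to learn about ingress-nginx and to check which Kubernetes versions each release supports. Downloading the YAML manifests from the GitHub repository is recommended.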

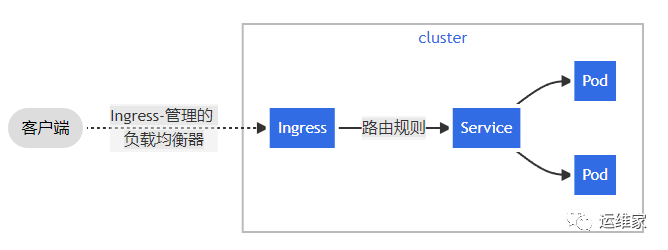

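Once you understand how communication works inside Kubernetes, the last piece is access from outside the cluster: an Ingress forwards requests for a domain name or IP to the corresponding Service.

Ingress can provide load balancing, SSL termination, and name-based virtual hosting.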

1. Understanding Ingress: what is an Ingress?

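Ingress exposes HTTP and HTTPS routes from outside the cluster to Services inside the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

An Ingress can give Services externally reachable URLs, load-balance traffic, terminate SSL/TLS, and offer name-based virtual hosting. An Ingress controller is responsible for fulfilling the Ingress, usually with a load balancer, though it may also configure an edge router or additional frontends to help handle the traffic.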
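As a rough illustration of the capabilities listed above, the sketch below shows one Ingress that terminates TLS and routes two hostnames to different Services (name-based virtual hosting). The hostnames, the Secret `example-tls`, and the Services `shop-svc` and `blog-svc` are hypothetical placeholders. It assumes a cluster that serves `networking.k8s.io/v1` (v1.19 or later) and leaves out ingress class selection for brevity.

```yaml
# Hypothetical example: every name, host, and Secret below is a placeholder.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: vhost-demo
spec:
  tls:
  - hosts:
    - shop.example.com
    - blog.example.com
    secretName: example-tls        # a kubernetes.io/tls Secret with the certificate and key
  rules:
  - host: shop.example.com         # first virtual host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: shop-svc
            port:
              number: 80
  - host: blog.example.com         # second virtual host, different backend
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: blog-svc
            port:
              number: 80
```

The controller matches the request's Host header against each rule, so both sites can share one IP address and one pair of ports.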

An Ingress does not expose arbitrary ports or protocols. To expose services other than HTTP and HTTPS to the Internet, you typically use a Service of type Service.Type=NodePort or Service.Type=LoadBalancer.
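For a non-HTTP workload, a NodePort Service might look roughly like the sketch below. The MySQL workload, the `app: mysql` label, and the port numbers are hypothetical and only illustrate the shape of such a Service.

```yaml
# Hypothetical example: a TCP (non-HTTP) service exposed without an Ingress.
apiVersion: v1
kind: Service
metadata:
  name: mysql-external
spec:
  type: NodePort
  selector:
    app: mysql                 # assumed Pod label
  ports:
  - protocol: TCP
    port: 3306                 # Service port inside the cluster
    targetPort: 3306           # container port
    nodePort: 30306            # must fall in the node port range (30000-32767 by default)
```

Clients outside the cluster would then connect to any node's IP on port 30306.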

2. Configuring and tuning the ingress YAML manifest

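[root@k8s-master01 ingress-nginx]# kubelet --version
Kubernetes v1.19.16

My Kubernetes version is v1.19.16, so according to the official documentation I can use nginx-ingress-controller v1.2.1, the latest release at the time of writing.

The manifest can be downloaded from the GitHub repository mentioned above. It needs the following changes:

- In mainland China, change the images to a domestic mirror, for example:
  registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.2.1
  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1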

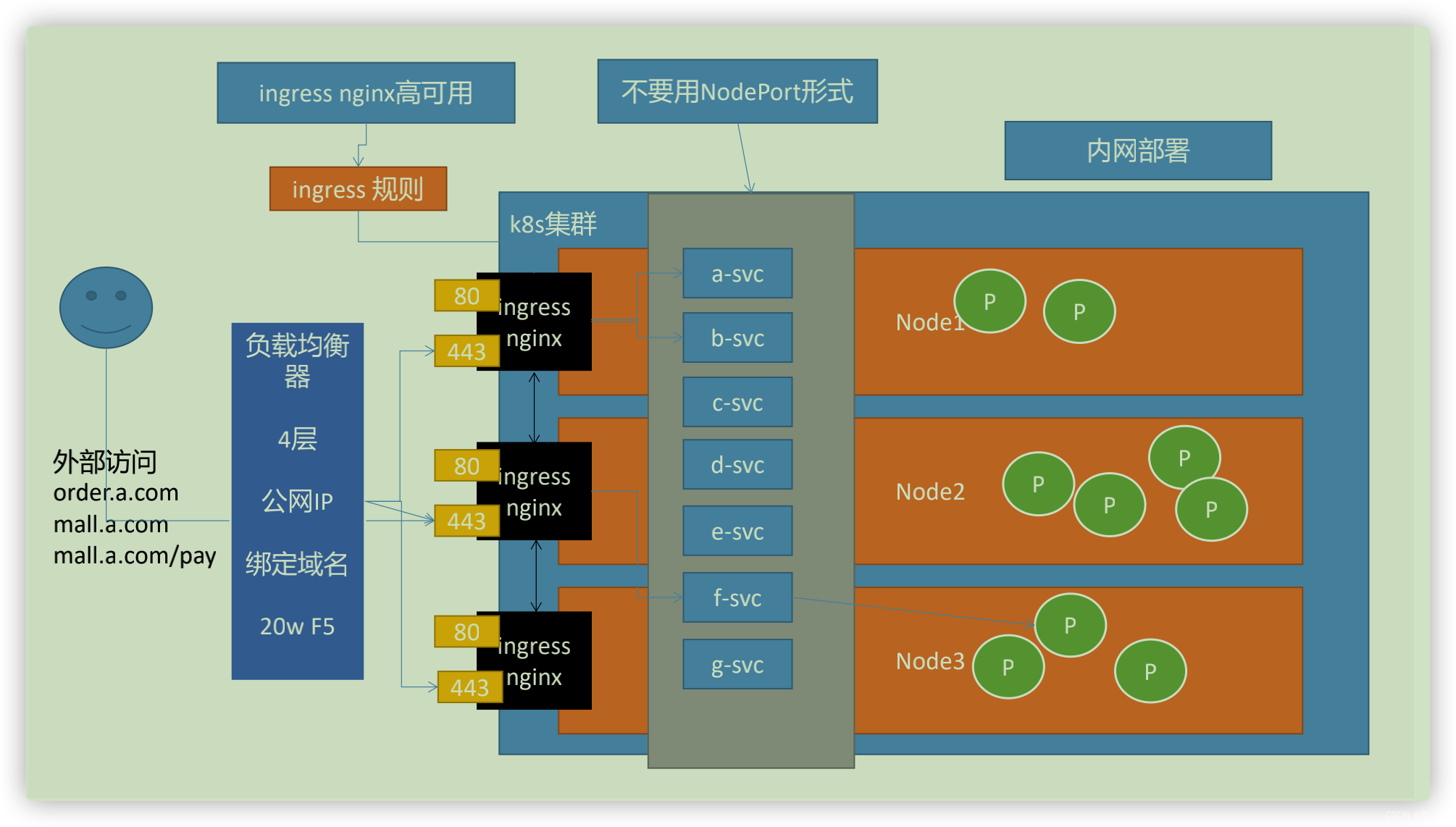

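- Change the Deployment to a DaemonSet. A DaemonSet automatically runs one controller Pod on every node that should have one (controlled by a label).
- Run the container on the host network (hostNetwork: true), so that it binds ports 80 and 443 directly on the host. There is no intermediate hop, which is faster.
- Because the container uses the host network, the DNS policy must be changed to match (dnsPolicy: ClusterFirstWithHostNet).
- Change the Service to ClusterIP; NodePort mode is no longer needed.
- Set the DaemonSet's nodeSelector to ingress-node=true. Labeling a node with ingress-node=true is then all it takes to add a controller instance, and removing the label takes one away. (The manifest deployed later in this post uses the label node-role=ingress instead.)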
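Taken together, the changes above might look roughly like the fragment below. This is a partial sketch rather than a complete manifest: it shows only the fields discussed in the list, and everything else (selector, labels, args, volumes, and so on) is assumed to stay as in the downloaded file. Deployment-only fields such as `replicas` or `strategy`, if present, would also need to be removed, since a DaemonSet does not accept them.

```yaml
# Partial sketch: only the fields changed by the steps above are shown.
apiVersion: apps/v1
kind: DaemonSet                      # was: Deployment
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  template:
    spec:
      hostNetwork: true              # bind 80/443 directly on the node
      dnsPolicy: ClusterFirstWithHostNet   # keep cluster DNS working with hostNetwork
      nodeSelector:
        ingress-node: "true"         # label values are strings, so quote "true"
      containers:
      - name: controller
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.2.1
```

Without `ClusterFirstWithHostNet`, a host-network Pod falls back to the node's own resolver and cannot resolve in-cluster Service names.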

The optimized file can be downloaded from: https://blog.buwo.net/public/jyl-containers/ingress-controller.yaml

3. Installing ingress-nginx

A self-built cluster uses the bare-metal installation method.

3.1 Tuning the ingress YAML file

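The following changes are needed:

- Change the ingress-nginx-controller image to registry.cn-hangzhou.aliyuncs.com/google_containers/ingress-nginx-controller:v0.46.0 (use the tag that matches your release; the Pod events later in this post show v1.2.1 actually running);
- Change the Deployment to a DaemonSet, which automatically runs one controller Pod on every node that should have one (controlled by a label);
- Run the container on the host network so that it binds ports 80 and 443 directly on the host, with no intermediate hop, which is faster;
- Because the container uses the host network, change the dnsPolicy to the host-network variant as well;
- Change the Service to ClusterIP; NodePort mode is no longer needed;
- Set the DaemonSet's nodeSelector to node-role: ingress. Labeling a node with node-role=ingress is then all it takes to add a controller instance, and removing the label takes one away.

node-role: ingress # from now on, labeling a node with this is enough to deploy ingress-nginx onto it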
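The Service change in the list above could be sketched as follows. The Service name and ports match the `kubectl get all` output shown later in this post; the named target ports `http` and `https` are an assumption based on the upstream manifests and should be checked against the downloaded file.

```yaml
# Partial sketch: the controller Service switched from NodePort to ClusterIP.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: ClusterIP            # was: NodePort in the bare-metal manifest
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http         # assumed named container port
  - name: https
    port: 443
    protocol: TCP
    targetPort: https        # assumed named container port
  # selector: keep whatever the downloaded manifest already defines
```

External traffic reaches the controller through the node's own ports 80 and 443 (host network), so this Service only serves in-cluster clients.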

3.2 Deploying ingress-nginx on Kubernetes

Check whether ports 80 and 443 are already in use on the master and the worker nodes:

[root@k8s-master01 ingress-nginx]# ss -ntpl|grep -w 80
[root@k8s-master01 ingress-nginx]# ss -ntpl|grep -w 443

Deploy the YAML file with kubectl apply:

[root@k8s-master01 ingress-nginx]# kubectl apply -f ingress-controller.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
configmap/ingress-nginx-controller created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
service/ingress-nginx-controller-admission created
service/ingress-nginx-controller created
daemonset.apps/ingress-nginx-controller created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
serviceaccount/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created

Deploy the ingress controller onto the nodes that need it:

Because we use a DaemonSet, in principle a controller would be installed on every node. However, our nodeSelector filters on the label node-role=ingress, so at this point nothing has been scheduled on any node:

[root@k8s-master01 ingress-nginx]# kubectl get ds -n ingress-nginx
NAME                       DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR       AGE
ingress-nginx-controller   0         0         0       0            0           node-role=ingress   5m9s

To install the ingress controller on a node, just label that node with node-role=ingress:

[root@k8s-master01 ingress-nginx]# kubectl label node k8s-node01 node-role=ingress
[root@k8s-master01 ingress-nginx]# kubectl label node k8s-node02 node-role=ingress

Check the ingress-nginx resources in the cluster:

[root@k8s-master01 ingress-nginx]# kubectl get all -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-628r6   0/1     Completed   0          104d
pod/ingress-nginx-admission-patch-w2js2    0/1     Completed   0          104d
pod/ingress-nginx-controller-s6nx6         1/1     Running     4          104d
pod/ingress-nginx-controller-tthn5         1/1     Running     3          104d

NAME                                         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
service/ingress-nginx-controller             ClusterIP   10.97.116.179    <none>        80/TCP,443/TCP   104d
service/ingress-nginx-controller-admission   ClusterIP   10.108.175.254   <none>        443/TCP          104d

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR       AGE
daemonset.apps/ingress-nginx-controller   2         2         2       2            2           node-role=ingress   104d

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/ingress-nginx-admission-create   1/1           1s         104d
job.batch/ingress-nginx-admission-patch    1/1           1s         104d

[root@k8s-master01 ingress-nginx]# kubectl get pod -n ingress-nginx -o wide
NAME                                   READY   STATUS      RESTARTS   AGE    IP               NODE         NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-628r6   0/1     Completed   0          105d   10.244.58.200    k8s-node02   <none>           <none>
ingress-nginx-admission-patch-w2js2    0/1     Completed   0          105d   10.244.58.201    k8s-node02   <none>           <none>
ingress-nginx-controller-4ht4c         1/1     Running     0          28m    192.168.232.71   k8s-node01   <none>           <none>
ingress-nginx-controller-m5vk4         1/1     Running     0          28m    192.168.232.72   k8s-node02   <none>           <none>

The controller Pods report the nodes' own addresses (192.168.232.x) rather than Pod-network addresses (10.244.x.x), which is what you would expect with hostNetwork: true. At this point the deployment has succeeded.

4. Using ingress-nginx

First create a Deployment to test against:

# namespace
apiVersion: v1
kind: Namespace
metadata:
  name: tomcat-demo
  labels:
    name: tomcat-demo
    app: tomcat-demo
---
# deploy
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-demo
  namespace: tomcat-demo
spec:
  selector:
    matchLabels:
      app: tomcat-demo
  replicas: 2
  template:
    metadata:
      labels:
        app: tomcat-demo
    spec:
      hostname: k8s-node01   # sets the Pod's hostname only; it does not pin the Pod to that node
      containers:
      - name: tomcat-demo
        image: registry.cn-hangzhou.aliyuncs.com/liuyi01/tomcat:8.0.51-alpine
        ports:
        - containerPort: 8080
---
# service
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  namespace: tomcat-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-demo

Verify that the Pods can be reached through the Service:

curl -l 10.102.44.103

The ClusterIP is reachable from the master. The next step is to point a domain name at this Service through an Ingress.

[root@k8s-master01 test]# cat tomcat-demo-ingress.yaml
apiVersion: extensions/v1beta1   # can be replaced with networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: tomcat-demo-ingress
  namespace: tomcat-demo
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: www.ericejia.net
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat-service
          servicePort: 80
# Note that most Ingress controllers do not forward traffic to the Service itself.
# They use the Service only to obtain the list of backend Endpoints and forward
# directly to the Pods, which saves a network hop and improves performance.

[root@k8s-master01 test]# kubectl apply -f tomcat-demo-ingress.yaml
[root@k8s-master01 test]# kubectl get ingress -A
Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
NAMESPACE     NAME                  CLASS    HOSTS              ADDRESS   PORTS   AGE
tomcat-demo   tomcat-demo-ingress   <none>   www.ericejia.net             80      9m8s

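The Ingress API versions differ as follows:

| Feature | extensions/v1beta1 | networking.k8s.io/v1beta1 | networking.k8s.io/v1 |
|---|---|---|---|
| Status | ❌ Deprecated | ❌ Deprecated | ✅ Stable |
| Kubernetes version | Deprecated in v1.14+, removed in v1.22+ | Deprecated in v1.19+, removed in v1.22+ | Stable since v1.19 |
| Backend configuration | Flat (serviceName, servicePort) | Flat (serviceName, servicePort) | Structured (service.name, service.port) |
| pathType | Optional (field added in v1.18) | Optional (field added in v1.18) | Required field |
| Backward compatibility | None | None | Long-term support |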

The extensions/v1beta1 version is shown above; the networking.k8s.io/v1 version follows. Delete the old Ingress first, then confirm that it is gone:

kubectl delete ingress -n tomcat-demo tomcat-demo-ingress
[root@k8s-master01 test]# kubectl get ingress -A

# networking.k8s.io/v1 version
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tomcat-demo-ingress
  namespace: tomcat-demo
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: www.ericejia.net           # map this domain to tomcat-service
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat-service     # send all requests to port 80 of tomcat-service
            port:
              number: 80
# Note that most Ingress controllers do not forward traffic to the Service itself.
# They use the Service only to obtain the list of backend Endpoints and forward
# directly to the Pods, which saves a network hop and improves performance.
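The `kubernetes.io/ingress.class` annotation has been deprecated since Kubernetes v1.18 in favor of the `spec.ingressClassName` field. A possible equivalent of the manifest above is sketched below. It assumes an IngressClass named `nginx` handled by the `k8s.io/ingress-nginx` controller; the apply output earlier in this post does not list one, so the sketch includes it, and it can be dropped if your manifest already creates one.

```yaml
# Assumption: create this only if no IngressClass named "nginx" exists yet.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  controller: k8s.io/ingress-nginx   # default controller class of ingress-nginx v1.x
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tomcat-demo-ingress
  namespace: tomcat-demo
spec:
  ingressClassName: nginx            # replaces the kubernetes.io/ingress.class annotation
  rules:
  - host: www.ericejia.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tomcat-service
            port:
              number: 80
```

`kubectl get ingressclass` shows whether a class already exists before you apply the first document.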

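Notes on pathType:

Exact: exact path match. With path: /foo, only a request path identical to /foo matches.

Prefix: prefix match, evaluated per path element. With path: /foo, request paths that begin with /foo match, such as /foo and /foo/bar, but /foobar does not.

ImplementationSpecific: matching is left to the specific Ingress controller. For example, the Nginx Ingress controller ignores the path type and treats it as…

In the networking.k8s.io/v1 API, pathType values must be written with a capital first letter, exactly as above.

All three forms were tested and work.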
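To make the difference between the path types concrete, here is a small sketch that uses Exact and Prefix side by side. The Ingress name, the host `demo.example.com`, and the paths are hypothetical; the backend reuses the tomcat-service Service from this post.

```yaml
# Hypothetical example: the name, host, and paths are placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pathtype-demo
  namespace: tomcat-demo
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: demo.example.com
    http:
      paths:
      - path: /healthz
        pathType: Exact        # matches /healthz only; /healthz/ and /healthz/x do not match
        backend:
          service:
            name: tomcat-service
            port:
              number: 80
      - path: /app
        pathType: Prefix       # matches /app, /app/ and /app/x, but not /apple
        backend:
          service:
            name: tomcat-service
            port:
              number: 80
```

A value such as `prefix` or `PREFIX` would be rejected by the API server, since only the three capitalized values are valid.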

A note on an error I ran into:

MountVolume.SetUp failed for volume "webhook-cert" : secret "ingress-nginx-admission" not found

kubectl get secret ingress-nginx-admission -n ingress-nginx

.......
Events:
  Type     Reason       Age                From                      Message
  ----     ------       ----               ----                      -------
  Normal   Scheduled    10m                default-scheduler         Successfully assigned ingress-nginx/ingress-nginx-controller-s4dtb to k8s-node01
  Warning  FailedMount  10m (x2 over 10m)  kubelet                   MountVolume.SetUp failed for volume "webhook-cert" : secret "ingress-nginx-admission" not found
  Normal   Pulled       10m                kubelet                   Container image "registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.2.1" already present on machine
  Normal   Created      10m                kubelet                   Created container controller
  Normal   Started      10m                kubelet                   Started container controller
  Normal   RELOAD       10m                nginx-ingress-controller  NGINX reload triggered due to a change in configuration

A search turned up two possible fixes.

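Option 1:

Run the commands below to delete the existing admission webhook configuration. My earlier installation had failed, and re-applying the manifest afterwards did not remove the old validating webhook configuration, which caused the problem above.

kubectl get ValidatingWebhookConfiguration
kubectl delete ValidatingWebhookConfiguration ingress-nginx-admission
kubectl apply -f tomcat-demo-ingress.yaml

After this, re-creating the Ingress and binding the domain name works.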

For more advanced ingress-nginx usage, see the Alibaba Cloud documentation:

https://help.aliyun.com/zh/ack/serverless-kubernetes/user-guide/advanced-ingress-configurations#58e42304f4ccc
